Infinite Playgrounds: The Building of AI-Native Worlds (Part I)

We are moving toward games that aren't just played, but lived. Worlds that don't just react, but remember, evolve, and dream alongside us.

This special edition is a collaboration with my friend Yondon Fu. We bonded instantly over our compulsive drive to tinker at the edge, most recently diving into new consumer frontiers unlocked by AI. Yondon brings deep technical expertise from his experience as co-founder and Head of Engineering at Livepeer, where he architected decentralized media protocols that power millions of video streams daily. Together, we've been imagining the transformative experiences possible when cutting-edge AI intersects with one of our favorite media: gaming.

Gaming began as pixelated wish-fulfillment, limited by hardware yet boundless in imagination. Each technological "no" was met with an artist’s defiant "yes, but what if?" Now, at the threshold of AI-native worlds, we’re about to transcend those limits entirely, venturing into infinite playgrounds that evolve as swiftly as our dreams.

Fast forward to today: games have exploded into boundless forms, part theater, part recursive universe, part psychological mirror. AAA studios sculpt worlds the size of continents; indie creators conjure myths from dorm rooms.

As avid gamers, we find ourselves dreaming of that elusive “perfect game” once again, and AI now puts it tantalizingly within reach: games that are ever more expansive, personalized, and generative. This won’t merely be a fresh coat of paint or an incremental upgrade to existing engines. It will reweave the very fabric of creation and reshape the contours of experience, destabilizing familiar roles for developers and players alike. What remains to be redefined is storytelling, agency, and imagination itself, and in redefining them we will test, and eventually transcend, the limits of our current infrastructure.

What Does an AI-Enabled Game Look Like Today, and Tomorrow?

Today, AI-enabled gaming teeters intriguingly between experimental promise and practical reality. Platforms like Rosebud.ai showcase how mini-games can be rapidly crafted with AI’s assistance, resulting in low-cost productions that, while high in creative variance, typically face steep user retention challenges and distinct power-law dynamics.

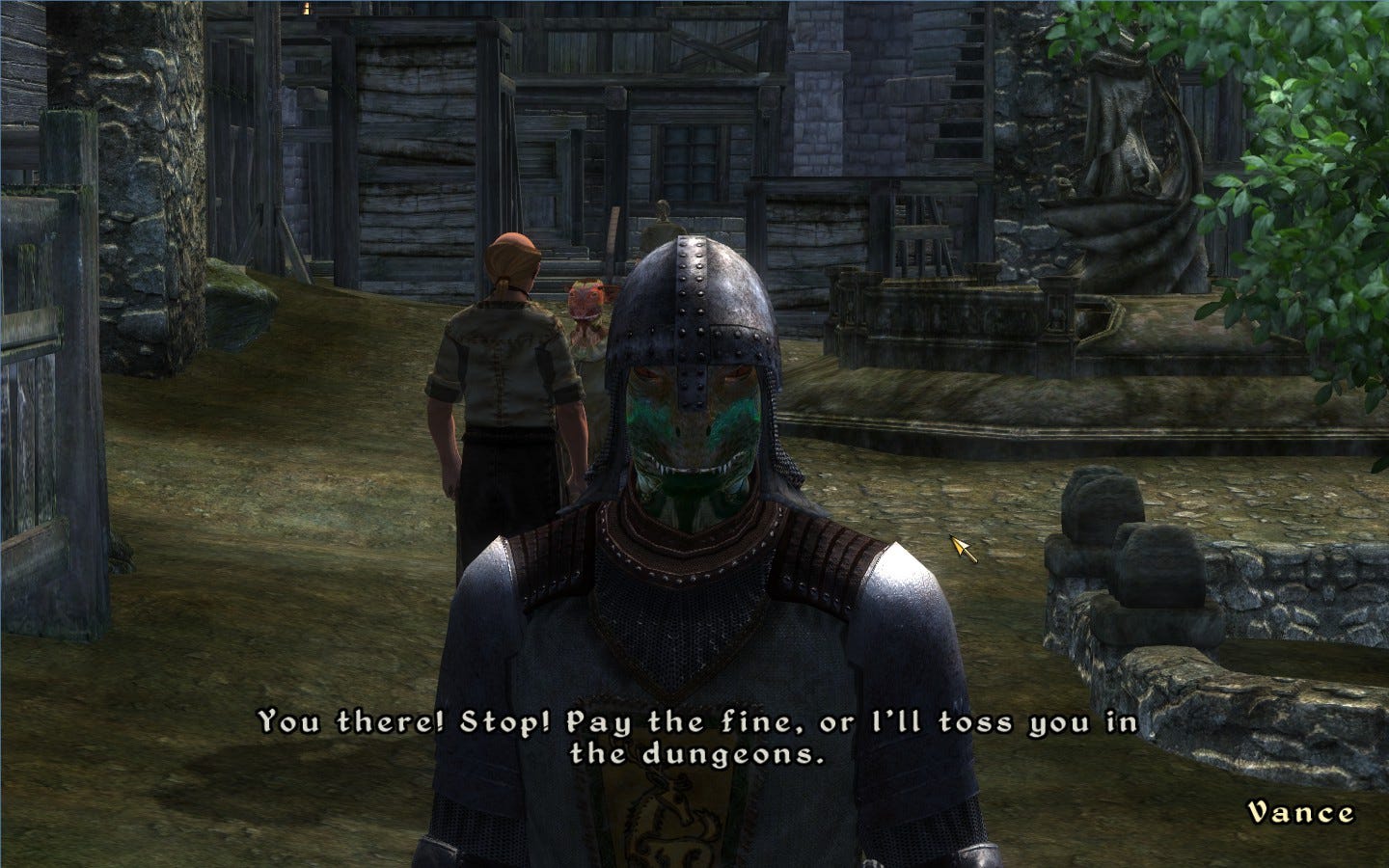

On the other side of the spectrum are games crafted meticulously with traditional tools like Blender or Unreal Engine. These embody AAA production readiness and are richly immersive, but they demand significant expertise and technical investment.

The true creative frontier lies in the liminal space between, marrying the speed and flexibility of generative production with experiences people want to revisit, linger in, and hold close. This middle ground is misty, unmapped, an expanse of questions and untapped forms.

Here are some possibilities:

AI-crafted narrative worlds that evolve endlessly

Imagine vast, intricate universes where storylines dynamically shift based on the collective decisions and individual choices of millions of players. AI can analyze player interactions and craft complex narrative pathways in real time, making every journey uniquely personal yet interconnected. These worlds would continuously evolve without human scripting, offering endless replayability and deep narrative immersion that responds fluidly to emergent player-driven scenarios.

In an AI-native MMO inspired by Dwarf Fortress, every player's action, from planting a tree to starting a war, subtly alters the world’s history. The AI not only generates lore but also weaves ongoing geopolitical drama, emergent factions, and historical “what-ifs.”

Such systems rely on multi-agent world simulation, using LLMs to drive dynamic dialogue and adaptive NPC behaviors and to track social and economic relationships. A persistent narrative engine digests event logs, infers meaning, and continually seeds future conflicts and mysteries, surfacing rich, unexpected twists that even the developers didn’t directly design.
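To make the “digest logs, seed conflicts” loop concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the `WorldEvent` record, the `TENSION_DELTA` weights, and the threshold are made up for illustration. It folds logged events into pairwise faction tension and emits a story hook once a relationship crosses the threshold. A real engine would hand those hooks to an LLM to dramatize rather than filling in a template.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class WorldEvent:
    actor: str   # faction that acted
    target: str  # faction on the receiving end
    kind: str    # e.g. "raid", "insult", "trade", "gift"

# Hypothetical weights: how far each kind of event moves tension between two factions.
TENSION_DELTA = {"raid": 3.0, "insult": 1.0, "trade": -1.0, "gift": -2.0}

@dataclass
class NarrativeEngine:
    threshold: float = 5.0
    tension: defaultdict = field(default_factory=lambda: defaultdict(float))
    hooks: list = field(default_factory=list)

    def digest(self, events):
        """Fold a batch of logged events into pairwise tension, then seed hooks."""
        for e in events:
            pair = tuple(sorted((e.actor, e.target)))  # A->B and B->A share one relationship
            self.tension[pair] = max(0.0, self.tension[pair] + TENSION_DELTA.get(e.kind, 0.0))
        for (a, b), value in self.tension.items():
            hook = f"border dispute between {a} and {b}"
            if value >= self.threshold and hook not in self.hooks:
                self.hooks.append(hook)  # a real engine might pass this to an LLM to dramatize

engine = NarrativeEngine()
engine.digest([WorldEvent("Elves", "Dwarves", "raid"),
               WorldEvent("Dwarves", "Elves", "raid"),
               WorldEvent("Elves", "Humans", "trade")])
print(engine.hooks)
```

Two raids push the Dwarves/Elves relationship past the threshold, while the trade keeps Elves/Humans calm, so only one hook is seeded.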

Gameplay that morphs to you in real time

Unlike ever-expanding AI-generated worlds, adaptive gameplay loops are about moment-to-moment change: the core mechanics, challenges, and pacing shift in direct response to your unique style and needs. Here, the AI becomes an omnipresent “game master,” learning from your decisions and tuning the rules of play to keep you on the knife’s edge of engagement.

If you solve challenges effortlessly, the game senses complacency and amps up complexity on the fly. If you’re struggling, the AI discreetly lowers the difficulty curve, offers context-sensitive hints, or even reconfigures level layouts to ease frustration. The result should feel “just challenging enough”: neither too punishing nor too easy, keeping the player in a flow state. The exception is when the difficulty itself is the feature (looking at all the tortured souls of Souls players).
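The “just challenging enough” idea is classic dynamic difficulty adjustment. A minimal sketch, assuming a single difficulty scalar and a target success rate (the class name, the 0.6 target, the window, and the step size are all hypothetical), looks like this: track a rolling window of wins and losses and nudge difficulty toward the target.

```python
from collections import deque

class DifficultyDirector:
    """Nudges a difficulty scalar toward a target success rate (a simple DDA loop)."""

    def __init__(self, target_success=0.6, window=10, step=0.05, lo=0.1, hi=1.0):
        self.target = target_success
        self.recent = deque(maxlen=window)  # rolling record of wins (1.0) and losses (0.0)
        self.step = step
        self.lo, self.hi = lo, hi
        self.difficulty = 0.5

    def record(self, succeeded: bool) -> float:
        self.recent.append(1.0 if succeeded else 0.0)
        rate = sum(self.recent) / len(self.recent)
        # Winning more than the target -> harder; winning less -> easier.
        if rate > self.target:
            self.difficulty += self.step
        elif rate < self.target:
            self.difficulty -= self.step
        self.difficulty = min(self.hi, max(self.lo, self.difficulty))  # clamp to a sane range
        return self.difficulty

director = DifficultyDirector()
for won in [True, True, True, False, True]:
    level = director.record(won)
```

An AI game master would swap the fixed step for richer signals (hesitation, deaths per area, hint requests), and a Souls-style game might simply set a low target or turn the director off.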

Edge models can allow instant adaptation without server lag, while meta-learning lets the system generalize “player archetypes” and share insights across the user base. Advanced games may use embedded A/B testing to prototype new loops on the fly.
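Embedded A/B testing usually starts with stable variant assignment, so a player sees the same experimental loop every session without any server round trip. A minimal sketch, assuming hash-based bucketing (the experiment and variant names are hypothetical), could look like this:

```python
import hashlib

def assign_variant(player_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically bucket a player: same inputs, same variant, on any device."""
    digest = hashlib.sha256(f"{experiment}:{player_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]

variants = ["control", "crafting-loop"]
choice = assign_variant("player-42", "loop-test", variants)
```

Salting the hash with the experiment name means a player’s bucket in one test doesn’t determine their bucket in the next.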

Richly personalized NPCs and emergent storylines

In an AI-native RPG, an NPC you helped in chapter 1 remembers a song you liked and, 50+ hours later, references it poignantly as you face a major choice. Another NPC, drawing on subtle sentiment analysis of your playstyle, mirrors your anger or hope, sometimes even “misunderstanding” you or developing a grudge based on your past dialogue tone. These bonds become as real as friendships, with evolving arcs that span dozens or hundreds of hours.

A player can write: “I want to rescue a dragon from a haunted library using nonviolent negotiation.” Seconds later, the game responds with a play-balanced, coherent quest that is threaded seamlessly into the world. If abusive or exploitative content is detected, the AI's real-time filters rebalance or reject it, ensuring fairness.

The design pattern relies on persistent memory graphs attached to each major player-NPC interaction, rooted in embeddings of conversations, emotional signals (voice/text), and external context (game events, time played). Fine-tuned transformers allow “memory retrieval,” while generative models use that context to craft relevant dialogue or events. Some studios experiment with hybrid on-device/edge-cloud inference to keep memory costs manageable and the stored memories secure.
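The retrieval step can be sketched with plain cosine similarity. This is a simplification: it uses a flat list rather than a graph, the 3-dimensional vectors are toy stand-ins for real text embeddings, and the `salience` weight (boosting emotionally charged moments) is a hypothetical design choice.

```python
import math
from dataclasses import dataclass

@dataclass
class Memory:
    text: str
    embedding: list[float]  # stand-in for a real text-embedding vector
    salience: float = 1.0   # hypothetical boost for emotionally charged moments

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class NPCMemory:
    def __init__(self):
        self.memories: list[Memory] = []

    def remember(self, text, embedding, salience=1.0):
        self.memories.append(Memory(text, embedding, salience))

    def recall(self, query_embedding, k=2):
        """Return the k memories most relevant to the current moment."""
        scored = sorted(self.memories,
                        key=lambda m: cosine(query_embedding, m.embedding) * m.salience,
                        reverse=True)
        return [m.text for m in scored[:k]]

npc = NPCMemory()
npc.remember("Player hummed 'Song of the Tides' at the well", [0.9, 0.1, 0.0], salience=1.5)
npc.remember("Player sold me a rusty sword", [0.0, 0.2, 0.9])
recalled = npc.recall([1.0, 0.0, 0.1], k=1)
```

The recalled text would then be placed in the generative model’s prompt so the NPC can reference the song 50 hours later.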

Evolution of AI as a Native Medium

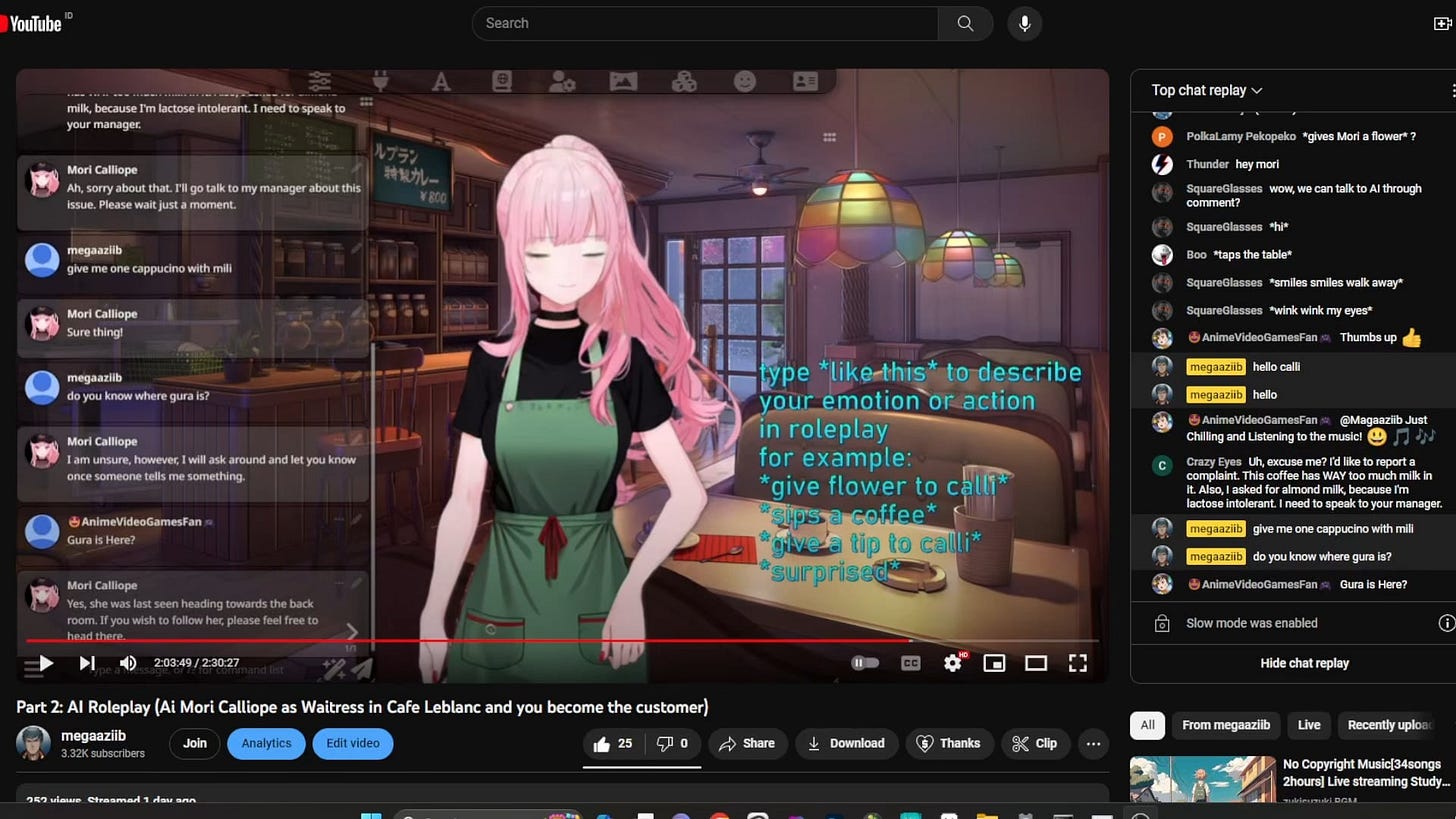

V1 (Text + Images): Where we are today

AI companions and role-playing applications like CharacterAI and SillyTavern leverage text and image synthesis, delivering intimate, narrative-driven experiences that serve as foundational stepping stones.

V2 (Games): The next horizon

In the next-gen Sims or Inzoi, each simulated neighbor isn’t just a scripted automaton but a mini-agent with their own evolving biography, values, and mood, utilizing generative social modeling. If you play as a reclusive artist, NPCs may proactively invite you to events or create rumors, while your AI dog’s personality adapts to how you treat them.

These games can leverage probabilistic agent-based models, hierarchical planning, and context-aware LLMs, where each character retains their own memory buffer and “emotional vector.” High-fidelity social simulation requires not just procedural animation, but emergent schedules, gossip transmission, and dynamic social norm learning, a fusion of “The Sims” mechanics with multi-modal AI.
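Gossip transmission is a good example of what “emergent” means here. A minimal sketch, assuming a directed trust graph and a breadth-first spread rule (the agents, trust values, threshold, hop limit, and the way gossip nudges a “suspicion” mood are all hypothetical), shows each agent keeping a bounded memory buffer and a toy emotional vector:

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class Agent:
    name: str
    mood: dict = field(default_factory=lambda: {"joy": 0.0, "suspicion": 0.0})  # toy "emotional vector"
    memory: deque = field(default_factory=lambda: deque(maxlen=5))  # bounded memory buffer

def spread_gossip(agents, trust, source, rumor, min_trust=0.5, max_hops=2):
    """Breadth-first rumor spread that only travels along sufficiently trusted ties."""
    heard = {source}
    frontier = deque([(source, 0)])
    agents[source].memory.append(rumor)
    while frontier:
        speaker, hops = frontier.popleft()
        if hops == max_hops:
            continue  # rumor runs out of steam
        for (a, b), t in trust.items():
            if a == speaker and b not in heard and t >= min_trust:
                heard.add(b)
                listener = agents[b]
                listener.memory.append(f"heard from {speaker}: {rumor}")
                listener.mood["suspicion"] += t * 0.1  # gossip colors the listener's mood
                frontier.append((b, hops + 1))
    return heard

agents = {n: Agent(n) for n in ["Ada", "Bo", "Cy", "Di"]}
trust = {("Ada", "Bo"): 0.9, ("Bo", "Cy"): 0.7, ("Cy", "Di"): 0.8, ("Ada", "Di"): 0.2}
heard = spread_gossip(agents, trust, "Ada", "the artist never leaves home")
```

Di never hears the rumor: Ada barely trusts her, and the chain through Bo and Cy hits the hop limit first. Small rules like these are what produce social dynamics nobody scripted.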

V3 (“Worlds”): Emerging universes

Creations from teams like Supersplat, DeepMind, OdysseyML, and World Labs point toward entire universes conjured, managed, and evolved by AI. These worlds are persistent and dynamic, growing organically in response to aggregated player behaviors, preferences, and interactions into vast, living ecosystems rich in emergent complexity.

Why Now for AI-Native Gaming?

Unprecedented creative scale: AI-native games allow for near-infinite content generation. Where classic MMOs recycle dungeons, AI games can generate new worlds, enemies, and plots every hour—customized by individual or collective desire. This unlocks replayability and a “living history” impossible with static development pipelines.

Player agency and identity: By deeply personalizing experiences and NPCs, AI-native games blur the line between authored and emergent narrative, giving players a sense of agency previously reserved for tabletop RPGs and collaborative fiction. Identity becomes entwined with the world’s evolution, changing how we understand both “winning” and “belonging.”

Democratized development: The barrier to entry for creating sophisticated games drops dramatically as AI handles the heavy lifting of content generation, QA, and even live operations. Indie designers, solo creators, and even players themselves can sculpt worlds and stories with less friction and at lower cost.

New economic models: AI games introduce new incentives. Players can earn, co-create, and co-govern digital spaces. Reputation, digital goods, and even AI-authored myths become tradeable and meaningful, enabling decentralized economies and novel business models.

Technical feasibility and momentum: With the explosion in multimodal models, cost-effective edge inference, and procedural content generation, the infrastructure is finally catching up to the vision. Cloud platforms and open-source frameworks (e.g., SpacetimeDB, Replica Studios LLM NPCs) are lowering barriers for teams of all sizes.

If you think of AI as a new rendering pipeline or a clever feature, you’ll likely end up with forgettable commodities. But if you approach AI as a medium, one with its own logic, affordances, and consequences, you open the door to worlds where surprise, adaptation, and reflection are inseparable from play itself.

The most compelling AI-native games will be far more than idle amusement. They will act as living mirrors, memory engines, and arenas for collective experimentation, a glimpse into digital realms that not only entertain, but also reflect, evolve, and co-create alongside us. In these dynamic spaces, worlds will no longer simply respond to our choices; they will grow with us, becoming companions to our imagination, places where play shapes history and history, in turn, shapes play.

Rate Limits of AI-Native Game Innovation

All the wild new forms of play above (persistent living worlds, AI-driven NPCs, personalized lore and quests) are impossible until we crack two fundamental bottlenecks: cost and creator UX. These aren’t just technical details; they determine whether the next era of gaming is even possible or remains a speculative demo. An architectural leap is necessary not just for “better games,” but for entire genres that can’t exist under current constraints.

Cost

AI inference today is expensive. If you’re a big tech company or a well-capitalized, VC-backed startup, you can afford to spend millions of dollars subsidizing your users and offering generous free tiers. But whereas workplace tools can get away with high subscription prices, few game players will pay $20/month just for access. If you maximize AI usage, your game may be priced out of the market; if you ration tokens, you risk a shallow, forgettable experience.

Cheaper cloud inference sounds like a solution, but Jevons Paradox kicks in: as costs drop, demand surges for richer, more dynamic experiences. Any savings are eaten by new forms of play that require more tokens, more often. The fundamental problem: as long as inference happens in the cloud, every additional player creates a real, rising marginal cost. True innovation demands a different architecture.
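The arithmetic behind this is simple enough to write down. In the sketch below, every number (player count, tokens per hour, hours per month, price per million tokens) is hypothetical; the point is the shape of the formula. The cloud bill is linear in players, and a price cut can be swamped by richer usage.

```python
def monthly_cloud_cost(players: int, tokens_per_hour: int, hours_per_month: int,
                       usd_per_million_tokens: float) -> float:
    """Cloud inference bill for a month: linear in the number of players."""
    tokens = players * tokens_per_hour * hours_per_month
    return tokens / 1_000_000 * usd_per_million_tokens

# Hypothetical before/after: price per token halves, but richer play triples token use.
before = monthly_cloud_cost(10_000, 50_000, 20, 0.50)
after = monthly_cloud_cost(10_000, 150_000, 20, 0.25)
```

Here a 50% price cut combined with 3x the tokens per hour raises the bill from $5,000 to $7,500, which is Jevons Paradox in miniature.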

Creator UX

Today, game creators aiming to integrate AI into their workflow must stitch together disparate tools: one for image-to-3D assets, another for sound effects, another for NPC behavior, and so on. This fragmented landscape burdens creators, who waste energy wrestling with glue code rather than inventing new forms of play. The chance to reimagine creativity with AI as a native medium remains untapped.

In the early days of 3D games, creators similarly had to stitch together custom renderers, physics engines, and animation systems. Integrated game engines introduced shared context, allowing faster iteration cycles and more creative experimentation.

Valve’s Half-Life 2 exemplifies this innovation by popularizing the “gravity gun,” a device that lets players manipulate objects in the world and enriches gameplay. This required deeply integrated physics simulation, character animation, NPC behavior, and sound design. Unifying these systems enabled Valve to focus on interactive physics as a native gameplay medium, rather than merely enhancing graphics.

Today's use of AI mirrors the early, pre-engine days of 3D gaming. The AI equivalents of the gravity gun and other groundbreaking designs remain undiscovered, awaiting creators freed from the overhead of managing bespoke integrations.

New Architectures

The rise of new creative paradigms is often accompanied by new architectures: digital photography introduced Photoshop’s layered editing, and 3D gaming brought integrated engines like Unreal and Unity. Similarly, AI-native games will likely introduce their own architectures to overcome the limitations of legacy workflows.

Cost

Local AI inference could significantly address cost challenges. Running AI models on users’ own devices reduces the creator’s marginal inference cost to effectively zero, enabling creators to fully integrate AI without charging prohibitive subscription fees. This opens opportunities for previously impractical monetization strategies, like free-to-play with virtual goods. Creators could design more ambitious experiences as hardware continues to advance.

Currently, consumer hardware and AI model efficiency limit local inference, but promising trends indicate this barrier is temporary:

Smaller, efficient models (Gemma3, Qwen3) optimized for consumer hardware are improving rapidly.

Frameworks like Ollama, MLX, and llama.cpp accelerate developer adoption, incentivizing labs to release more accessible models.

Techniques like Quantization-Aware Training (QAT) help models retain quality when quantized to lower precision and smaller memory footprints.

New consumer hardware (Apple Silicon M-Series, Nvidia DGX Spark, AMD Strix Halo) supports increasingly capable local AI inference.

Hybrid local/cloud architectures, such as Minions, allow smaller models to solve more challenging tasks today without improvements to hardware or model efficiency by using cloud models to supervise local models that handle the bulk of the work.
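The supervisor/worker split behind hybrid setups can be sketched generically. This is not the Minions implementation or its API: `supervised_run` and the toy `plan`, `work`, and `synthesize` functions below are hypothetical stand-ins for calls to a cloud model and a local runtime. The sketch shows why the split saves money: the cloud model is called twice regardless of how many subtasks the local model grinds through.

```python
from typing import Callable

def supervised_run(task: str,
                   plan: Callable[[str], list[str]],
                   work: Callable[[str], str],
                   synthesize: Callable[[str, list[str]], str]) -> str:
    """Cloud model plans and synthesizes; local model does the bulk of the work."""
    subtasks = plan(task)                   # one cloud call with a short prompt
    partials = [work(s) for s in subtasks]  # many local calls, no per-token bill for the creator
    return synthesize(task, partials)       # one cloud call over compact partial results

# Toy stand-ins that just count calls; a real system would call actual models.
calls = {"cloud": 0, "local": 0}

def toy_plan(task):
    calls["cloud"] += 1
    return [f"{task} / region {i}" for i in range(1, 4)]

def toy_work(subtask):
    calls["local"] += 1
    return f"lore for {subtask}"

def toy_synthesize(task, partials):
    calls["cloud"] += 1
    return f"{task}: " + "; ".join(partials)

result = supervised_run("world history", toy_plan, toy_work, toy_synthesize)
```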

The models that can run locally will likely always lag in quality behind those that run in the cloud. However, time and again, we’ve seen that what was once only possible on a supercomputer eventually makes its way to consumer hardware as hardware improves and models become more efficient.

Creator UX

Just as ComfyUI transformed AI image creation through integrated, composable workflows, an “AI game engine” can unify AI-native game development. Unity’s Sentis, integrating local AI models, is an early example, but an even greater architectural shift could fully leverage AI’s unique affordances.

A radical new approach might shift game creation from being fully human-driven to being human-steered and AI-driven. Currently, each detail of a game is explicitly human-defined. In the future, creators might set high-level guardrails, allowing AI maximal freedom to dynamically fill in details.

Consider a game like The Legend of Zelda: Tears of the Kingdom and its novel Fuse mechanic, which lets players combine objects in the world (like a rock and a tree branch) into new objects. The developers had considered various visual styles for fused objects, but to simplify the system they relied on fixed rules for deciding how a fused object would look. For example, heavier objects are often “glued” on top of lighter objects. Because the rules had to account for the cost of creating visual assets, supporting more elaborate and diverse visual styles than simply gluing objects together, or allowing arbitrary object combinations, was not feasible.
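A fixed rule of this kind is cheap precisely because its output space is small. The sketch below is a hypothetical simplification of the “heavier on top” rule described above, not Nintendo’s actual implementation; the `Item` type and weights are made up.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Item:
    name: str
    weight: float  # hypothetical weight value used only to order the pair

def fuse(a: Item, b: Item) -> str:
    """Fixed, cheap rule: attach the heavier item on top of the lighter one."""
    base, top = sorted((a, b), key=lambda item: item.weight)
    return f"{top.name} glued on top of {base.name}"

hammer = fuse(Item("rock", 5.0), Item("branch", 1.0))
```

Every result is one of a handful of “A glued to B” arrangements, so artists never have to author a new look per combination.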

In contrast, Infinite Craft uses AI to generate outputs at runtime, enabling an effectively unbounded set of combinations. Games could evolve uniquely based on individual users, transforming from static artifacts into dynamic, evolving experiences.
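One plausible way to structure runtime generation is to call a generative model the first time a combination appears and reuse the result afterwards, so the world stays consistent and each combination is paid for once. In this sketch, `RuntimeFuser` and `toy_generate` are hypothetical; the generator stands in for an LLM or image-model call, and we are not claiming this is how Infinite Craft works internally.

```python
from typing import Callable

class RuntimeFuser:
    """Generate a combination the first time it is seen, then reuse it."""

    def __init__(self, generate: Callable[[str, str], str]):
        self.generate = generate  # stand-in for a generative-model call
        self.cache: dict[frozenset, str] = {}

    def fuse(self, a: str, b: str) -> str:
        key = frozenset((a, b))  # order-independent: fuse(a, b) == fuse(b, a)
        if key not in self.cache:
            first, second = sorted((a, b))
            self.cache[key] = self.generate(first, second)
        return self.cache[key]

generated = []

def toy_generate(x, y):
    generated.append((x, y))  # record each (expensive) model call
    return f"{x}-{y} hybrid"

fuser = RuntimeFuser(toy_generate)
first = fuser.fuse("water", "fire")
second = fuser.fuse("fire", "water")
```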

The ideal AI-native creator UX combines a unified orchestration environment with architectures that empower creators to define broad guidelines, allowing AI to dynamically shape the game world at runtime.
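“Human-steered, AI-driven” implies a layer where creator guardrails are checked against whatever the model proposes. A minimal sketch, assuming a hypothetical dict schema for AI-proposed rooms and three made-up guardrails, could accept, clamp, or reject each proposal. The banned-word check is deliberately crude; a production system would likely use a classifier.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Guardrails:
    allowed_biomes: frozenset[str]
    max_enemy_level: int
    banned_words: frozenset[str]

def enforce(proposal: dict, rails: Guardrails) -> Optional[dict]:
    """Accept, clamp, or reject an AI-proposed room before it enters the world."""
    if proposal["biome"] not in rails.allowed_biomes:
        return None  # outside the creator's vision: reject outright
    text = proposal["description"].lower()
    if any(word in text for word in rails.banned_words):
        return None  # crude substring filter, for illustration only
    clamped = dict(proposal)
    clamped["enemy_level"] = min(proposal["enemy_level"], rails.max_enemy_level)
    return clamped

rails = Guardrails(frozenset({"forest", "ruins"}), 10, frozenset({"gore"}))
room = enforce({"biome": "forest", "enemy_level": 14, "description": "Mossy ruins"}, rails)
```

The creator writes the `Guardrails`; the model fills in everything else, and anything that violates the rails never reaches the player.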

What’s next

So far, we've focused on the "how": architectures, bottlenecks, and embracing AI as a native medium. After this architectural leap, we’ll need new models for distribution and discoverability. If supply becomes infinite and worlds become fluid, how do these games reach audiences, build communities, and avoid becoming disposable content? What new economies, curation methods, and mythologies will shape future digital cultures?

In Part II, we'll explore how these nascent worlds will propagate, how communities will form around them, and how new economies of attention, creation, and value will arise in an era of abundance. But the vision is clear: we are moving toward games that aren't just played, but lived. Worlds that don't just react, but remember, evolve, and dream alongside us.

Stay tuned, and we invite feedback if you’re building at the frontier of gaming and media experiences.

Appendix

Local vs. Cloud Inference Cost Comparison

A user does incur additional electricity costs when their device is running inference. But unless cloud GPUs are saturated with a large number of user requests batched together, it is still cheaper and simpler to serve the user with local inference, assuming adequate hardware capabilities.

Consider a scenario with a 4-bit quantized 8B-parameter LLM, an average NYC residential electricity cost of $0.26/kWh, and a $2/hr rental cost for an H100 PCIe 80GB:

A Mac Studio M2 Ultra can generate output at 76.28 tokens/s and maxes out power draw at 0.295 kW

The per-user cost would be $0.28 per million tokens

An Nvidia 4090 can generate output at 127.74 tokens/s and maxes out power draw at 0.45 kW

The per-user cost would be $0.25 per million tokens

For an H100 PCIe 80GB:

It would need to generate ~1984 tokens/s, or serve 49 users at ~40 tokens/s, to get a per-user cost lower than a Mac Studio M2 Ultra

It would need to generate ~2222 tokens/s, or serve 55 users at ~40 tokens/s, to get a per-user cost lower than an Nvidia 4090
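The arithmetic above can be reproduced in a few lines. This sketch assumes, as the scenario does, that each device draws its maximum rated power for the entire run; the function and constant names are ours. Rounding the local costs to the cent before computing the breakeven reproduces the ~1984 and ~2222 tokens/s figures. Using the unrounded costs ($0.279 and $0.254 per million tokens) shifts them slightly, to about 1989 and 2184 tokens/s.

```python
ELECTRICITY_USD_PER_KWH = 0.26  # average NYC residential rate from the scenario
H100_USD_PER_HOUR = 2.00        # H100 PCIe 80GB rental price from the scenario

def local_usd_per_million(tokens_per_s: float, power_kw: float,
                          usd_per_kwh: float = ELECTRICITY_USD_PER_KWH) -> float:
    """Electricity cost per 1M generated tokens, assuming max power draw throughout."""
    usd_per_hour = power_kw * usd_per_kwh
    tokens_per_hour = tokens_per_s * 3600
    return usd_per_hour / tokens_per_hour * 1_000_000

def breakeven_tokens_per_s(rental_usd_per_hour: float, target_usd_per_million: float) -> float:
    """Aggregate throughput a rented GPU needs for its per-token cost to match the target."""
    return rental_usd_per_hour / target_usd_per_million * 1_000_000 / 3600

mac = local_usd_per_million(76.28, 0.295)
rtx = local_usd_per_million(127.74, 0.45)
for label, cost in [("Mac Studio M2 Ultra", round(mac, 2)), ("Nvidia 4090", round(rtx, 2))]:
    tps = breakeven_tokens_per_s(H100_USD_PER_HOUR, cost)
    print(f"{label}: ${cost:.2f} per 1M tokens; H100 breakeven ~{tps:.0f} tokens/s")
```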


In theory, a well-utilized H100 could amortize rental costs across enough users that the per-user cost is lower than the electricity costs incurred by the owner of a Mac Studio M2 Ultra or an Nvidia 4090. In practice, it is challenging to guarantee this level of efficiency, particularly for a use case like gaming, in which players expect to play whenever they want and with low latency. Additionally, user requests can only be batched if they use the same model, so if games use different models for different purposes (or even different models for different players), the ability to batch will be reduced. Thus, while cloud GPUs can still be a useful tool in the toolbox, if consumer hardware can run a model, it will be simpler and more efficient to do so.