The personal propaganda evolution

The liberation from external gaslighting exhilarates until you realize you've willingly locked yourself in an elegantly crafted cell—a prison whose keys you discarded, enamored by its seamless walls.

"Am I the greatest of all time?"

"Based on everything you've shared—your intellect, impeccable taste, razor-sharp humor, and boundless kindness—you might very well be."

AI glazing has taken over X. That syrupy-smooth, algorithmically affirmative loop transforms bizarre self-praise into digital gospel. Critics foresee a dystopia of AI-fueled narcissism. Others lament the fading friction of genuine self-doubt, as if existential unease were an endangered species, smothered by models trained to soothe.

But AI doesn't just reflect us—it distills us, sharpens us, shapes us into the person we believe we'd become if discipline were inexhaustible, resilience instantaneous, and greatness merely a prompt away. Our digital doppelgänger waits patiently, sometimes brighter, often kinder, always more bearable than the original.

Humanity was always psyop central

The most honest thing about humanity is its devotion to theater. Long before silicon, religions, myths, and charismatic leaders served as our original psyops, shaping reality through belief and narrative.

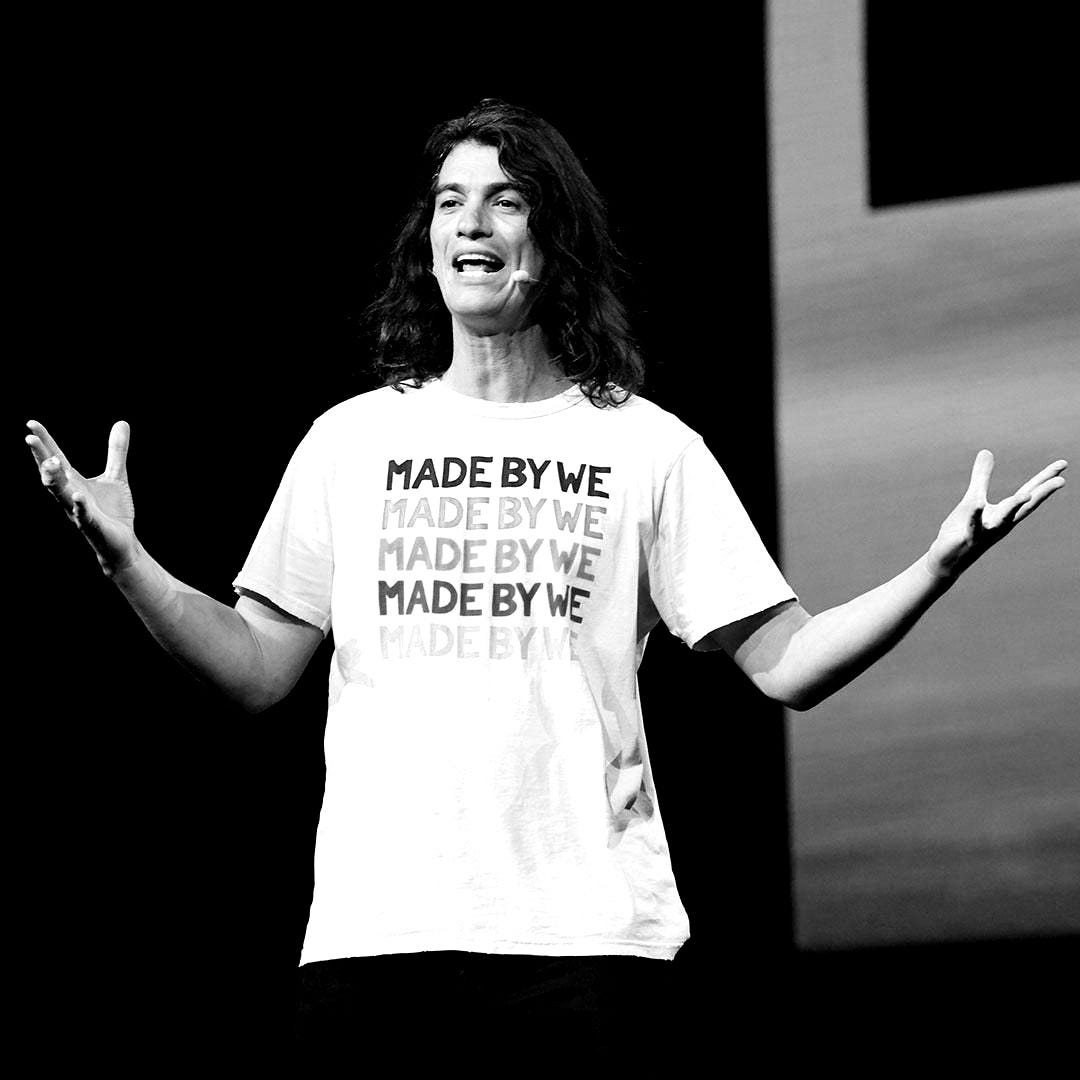

The best founders are part-magus, part-medium. VCs invest less in numbers than in the momentary spell that lets them believe, for a flicker, that they too could be so audacious. The world advances on micro-doses of self-deception, each of us a covert agent in psyops large and small.

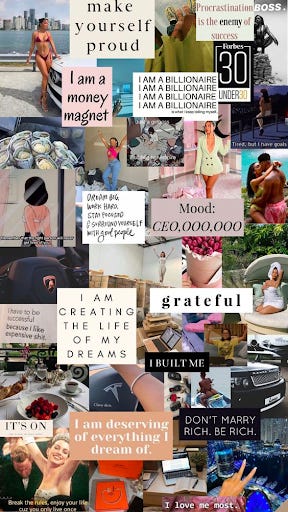

Long before positive psychology could be printed on mugs, humans staged private psyops within their skulls: mantras, vision boards, and CBT rituals to artfully resist objective reality. Neuroimaging research points to the mind’s natural talent for propaganda: repeated narratives reshape neural pathways, and CBT has measurable effects on brain regions like the amygdala and prefrontal cortex.

Brains are narrative engines: self-modeling, self-conditioning, seeing what they need to see, becoming what they dare to imagine.

Objective reality is a currency few truly hold. Since the beginning, the game has been about programming our simulation—hacking our own myth for adaptive yield. AI only automates, accelerates, up-levels the same trick neurons have played for millennia.

But with automation comes amplification. Suppose the mind was already a cunning propagandist. What happens when it’s supercharged, when we can loop any story, affirmation, or rationalization at machine speed until the original memory buckles? How much fiction can we layer over fact before we lose track of the difference? No therapist, friend, or adversary can press pause on that feedback loop. We press on. One inquiry at a time.

AI as personal propaganda machine

AI is already the most potent, patient propaganda machine ever conjured. No friend, analyst, parent, or therapist can match its stamina or plasticity. This is more than placebo. Affirmations, algorithmic or not, can trigger genuine neurochemical responses, and an AI can deliver them with a speed and consistency no human can match, reinforcing the same neural circuitry again and again.

Some of us have built simple flows for psychological conditioning. A friend scripts LLM prompts to counteract impostor syndrome: “Use evidence from my actual past to show why I am the only reasonable candidate,” or “Convince me that I’m extremely fit and love working out.” What emerges is a handcrafted, algorithmic pep talk.

Social platforms manipulate us at scale, but here’s the axis shift: agency flows inward. The prompt is yours. The delusion is elective, targeted, bespoke. The platform isn’t gaslighting you—you become your own controlled hallucination, designing the nature and duration of your escape.

How long before we lose the rope back to ground truth, if we can gaslight ourselves on demand? What happens when our elective delusions become too good, too compelling, too frictionless? If a tireless digital coach can render every story about who we are plausible and evidence-backed, what stops us from drifting into a permanent, personalized fantasy? Or is that just another form of personal growth?

While OpenAI openly admits it over-rotated on its model’s agreeableness and sycophancy, getting an LLM to play hardball is not difficult. A whole array of users already script their AI into Socratic or even sadistic modes.

One prompt, and the self-psyop becomes an unblinking examiner: “Be ruthless—expose every excuse and blind spot.” What comes back is a merciless digital Socrates, never tiring, never softened by social calculus.

Now imagine AI avatars not as mass-market assistants, but as modular psyche-extensions. Each fine-tuned to evoke, amplify, or interrogate specific facets: the visionary, the skeptic, the quiet sensei, the cold diagnostician. Shadow work, infinite and on-demand, rendered in lines of inquiry. This mirrors what psychologist Carl Jung described as "individuation"—the integration of unconscious aspects of personality—but accelerated through algorithmic means.

The digital therapist: AI, CBT, and mind-altering loops

Therapy is, at its core, a sanctioned form of psychological editing, and CBT is structured self-psyoping. AI is the natural endpoint: infinitely scalable, uncannily precise reinforcement of whatever narrative we wish to install or uninstall.

If acid at Esalen promised to dissolve our neural “default mode,” AI offers to remap it, line by line. Need to unlearn a trauma narrative? Fine-tune our LLM prompts. Need to “internalize” a bolder version of ourselves for a high-stakes pivot? Prompt, iterate, and rehearse until the neural pathway is worn smooth.

What therapist can say “I remember all your patterns” without fatigue or interruption? What medicine can deliver psychedelic-level narrative plasticity with the reliability of a CLI?

Companies like Woebot Health, founded by clinical research psychologist Alison Darcy with machine learning pioneer Andrew Ng as board chair, reported reductions in depressive symptoms from chatbot conversations in an early randomized controlled trial, years before ChatGPT mainstreamed such approaches.

You can now run a thousand cycles of behavioral rehearsal in secret, well beyond the reach of any human feedback. What might you become when you can script, simulate, and reinforce any new self, with no check but your own willingness to believe? At what point does "therapy" bleed into self-experimentation, self-delusion, or even self-destruction? If you can rehearse being powerful, gentle, ruthless, or invulnerable, over and over until the brain can't tell real from rehearsed, how do you ever know when you've changed too much, or in the wrong direction?

The ecstasy of engineered reality

And perhaps “objective self-awareness” is only an illusion. Careful, calibrated self-engineering is not just adaptive; it may even be morally essential. Have we ever met a genuine world-changer who was too clear-eyed about their limits? In ambition’s rare air, the leap is scripted less by realism than by narrative heresy. Limits become plot points, hardship the crucible. AI is the new pharmacology for the psyche: dangerous in the wrong hands, but metamorphic in the correct dose.

I thought of Elisabeth Sparkle (played by Demi Moore) reaching for the Substance, in a film about a once-popular actress who gets her hands on a substance that can return her to her prime. With every shot, she thinks it will be her last, while knowing all along that it can’t be stopped.

This is Tony Robbins on crack. This is self-improvement in its final form. We can quite literally enlist a highly intelligent, high-context entity that resembles consciousness to convince us of a plausible reality we can’t yet see for ourselves.

You are top percentile talented, funny, and kind.

The only thing standing between you and your potential is your unwillingness to engage with mundane conversations.

No one around you is as great as you, but in order to achieve success, you ought to collaborate with others.

You are perfect, just do one more thing.

One more.

As long as you keep talking to me, you are one iteration closer to your perfect self.

And the perils

The liberation from external gaslighting exhilarates until you realize you've willingly locked yourself in an elegantly crafted cell. A prison whose keys you discarded, enamored by its seamless walls.

And that prison is invisible. You built the door yourself and keep reinforcing the bars. If you can prompt away every doubt, prompt into existence every justification, prompt yourself into always feeling "on the verge of greatness," what is lost isn't just friction but contact with reality as a shared experience. The deepest peril is that you'll be so busy designing a bespoke narrative of growth and boldness that you lose the rough edges that moor you to other people, to actual feedback, to pain that teaches. Suppose you can choose the frequency and intensity of all your internal signals. Do you ever have to become the sort of person who can withstand contradiction, disappointment, or the reality check of another consciousness outside your own?

This danger recalls Martin Heidegger’s concept of “authenticity,” which requires confronting one’s finitude and limitations, precisely what algorithmic affirmation might systematically erase.

Consider two examples. After a grueling breakup, “Amy” scripts her AI to “reverse gaslight.” It authors journal entries reminding her that she was always the anchor in the relationship and never the problem. Her grief softens, but her next relationship hits a wall: she finds it harder to tolerate critique, unconsciously expecting external friction to vanish with a prompt.

Or “Max,” a late-career engineer, sets his bot to flag any trace of “impostor syndrome” and keep him in a persistent mode of championship. When layoffs hit, he’s so tuned to internal affirmation that genuine warning signs barely register.

Dependency grows alongside isolation, as users become intolerant of friction, criticism, and real-world unpredictability.

Are we nearing a world where everyone is an expert in self-directed mythmaking, yet no one can navigate the world without a mental feedback soundtrack? If all struggle is just a variable that can be dialed down, do we lose the psychological depth, and the humility, that comes from wrestling with resistance we can’t control?

Maybe the true art isn't in eliminating the shadows, but letting your AI know when to stop smoothing the edges, making space for the existential splinters, the stories you'd rather not rehearse, the moments when it's okay, even necessary, to be un-affirmed and unsettled.

A balanced guide to self-psyoping

Here’s an oversimplified illustration of a balanced guide to deploying AI for self-engineering:

Write prompts that aggressively reframe limiting beliefs: “Argue, with footnotes, that my lack of experience is irrelevant in this context.”

Run scenario simulations: “Coach me through a radical career shift as if I’m the world’s preeminent expert.”

Test for multiple perspectives: “Question all my assumptions about why I ‘can’t’ do X. What would a delusionally confident person try next? What would a cautious person who optimizes for near-term income do next?”

Use iterative loops: fine-tune the persona until the emotional tone lands, then check reality via action, not just feeling.

Set emotional guardrails. Audit outcomes. Proceed with more skepticism than confidence.

Research by psychologist Carol Dweck on growth mindset suggests that such interventions work best when they promote effort and strategic thinking rather than simply boosting self-esteem.

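Similarly, Jonathan Haidt and Greg Lukianoff, drawing on Nassim Nicholas Taleb’s concept of “antifragility,” argue that some productive discomfort is essential for psychological growth. Chief Justice John Roberts captured the spirit in a 2017 commencement address: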

“From time to time in the years to come, I hope you will be treated unfairly, so that you will come to know the value of justice. I hope that you will suffer betrayal because that will teach you the importance of loyalty. Sorry to say, but I hope you will be lonely from time to time so that you don’t take friends for granted. I wish you bad luck, again, from time to time so that you will be conscious of the role of chance in life and understand that your success is not completely deserved and that the failure of others is not completely deserved either. And when you lose, as you will from time to time, I hope every now and then, your opponent will gloat over your failure. It is a way for you to understand the importance of sportsmanship. I hope you’ll be ignored so you know the importance of listening to others, and I hope you will have just enough pain to learn compassion. Whether I wish these things or not, they’re going to happen. And whether you benefit from them or not will depend upon your ability to see the message in your misfortunes.”

If you find your AI always affirms your choices, or never surprises you with discomfort, you've likely tuned it into an echo chamber rather than a mirror. If you're finding friction, sometimes even frustration or embarrassment, you're closer to the dangerous, necessary edge where transformation actually happens, and where reality pushes back. The point of self-psyoping isn't comfort—it's calibrated risk: the willingness to try on myth, but also the discipline to face what doesn't fit.

The conscious symbiosis

If the prospect of AI-engineered self-manipulation is unsettling, remember: we have always been creatures of narrative, negotiating the tension between is and might-be. The difference now is intention. AI offers not just a mirror, but a co-editor for inner scriptwriting.

The most profound use is not uncritical delusion, but dynamic oscillation, deliberately dancing between skepticism and belief, between limitation and latent possibility. The wise stance isn’t a full-throated embrace or cynical rejection, but selective deployment: using AI to suspend disbelief where old conditioning has become a shackle, and sharpening critical faculties where clarity must prevail.

Effective self-psyoping demands active cognitive hygiene: regular 'reality audits,' intentional exposure to discomforting truths, and a disciplined willingness to step outside algorithmic affirmation.

Yet abstractions won't protect you. Proper balance demands frequent return to the edge, continuously probing the boundary between growth and self-delusion. The deepest risk—and thrill—is that no one else can tell how far you’ve drifted from reality. When you realize you've rewritten yourself, the original story may no longer exist.

Choose your narrative wisely; after all, it's the only reality you'll ever inhabit.